Blender and Oculus

The buzz about VR (Virtual Reality) is far from over. And just as with regular stereo 3D movie pipelines, we want to work in VR as soon as possible.

That doesn’t necessarily mean sculpting, animating, and grease-penciling all in VR. But we should at least have a quick way to preview our work in VR – before, after, and during every single one of those steps.

At some point we may want special interactions when exploring the scene in VR, but for the scope of this post I will stick to exploring a way to toggle in and out of VR mode.

That raises the question: how do we “see” in VR? Basically we need two “renders” of the scene, each one with a unique projection matrix and a modelview matrix that reflects the head tracking and the in-Blender camera transformations.
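To make the two matrices concrete, here is a minimal sketch in plain Python (no Blender modules). It assumes the camera and head matrices contain only rotation and translation, that the eyes sit half an interpupillary distance to either side of the head along its local X axis, and that the HMD reports each eye's field of view as four half-angle tangents. All function names and numeric values are illustrative, not taken from the addon or the Oculus SDK.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as row-major nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(x, y, z):
    """4x4 translation matrix."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def rotation_y(angle):
    """4x4 rotation about the Y axis (radians), e.g. a head turn."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def rigid_inverse(m):
    """Invert a rotation + translation matrix (no scale or shear assumed)."""
    rot_t = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    inv_t = [-sum(rot_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rot_t[i] + [inv_t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def eye_modelview(camera_world, head_pose, eye_offset_x):
    """Modelview for one eye: inverse of camera * head pose * eye offset."""
    eye_world = mat_mul(mat_mul(camera_world, head_pose),
                        translation(eye_offset_x, 0.0, 0.0))
    return rigid_inverse(eye_world)

def eye_projection(tan_left, tan_right, tan_down, tan_up, near, far):
    """OpenGL-style off-axis frustum from one eye's half-FOV tangents."""
    left, right = -tan_left * near, tan_right * near
    bottom, top = -tan_down * near, tan_up * near
    return [[2.0 * near / (right - left), 0.0,
             (right + left) / (right - left), 0.0],
            [0.0, 2.0 * near / (top - bottom),
             (top + bottom) / (top - bottom), 0.0],
            [0.0, 0.0, -(far + near) / (far - near),
             -2.0 * far * near / (far - near)],
            [0.0, 0.0, -1.0, 0.0]]

# Illustrative values: camera 5 units back, head turned 30 degrees.
camera_world = translation(0.0, 0.0, 5.0)
head_pose = rotation_y(math.radians(30.0))
ipd = 0.064  # assumed interpupillary distance in scene units
left_view = eye_modelview(camera_world, head_pose, -ipd / 2.0)
right_view = eye_modelview(camera_world, head_pose, +ipd / 2.0)
left_proj = eye_projection(1.2, 0.8, 1.0, 1.0, 0.1, 100.0)
```

The asymmetric tangents are what make each projection matrix unique per eye: the frustum is wider toward the outside of the face than toward the nose.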

These renders should be updated as often as possible (e.g., 75Hz), even if nothing changes in your Blender scene (since the head can always move around). Just to be clear, by render here I mean the same real-time render we see in the viewport.

There are different ways to accomplish this, but I would like to see an addon approach, to make it as flexible as possible so it can be adapted to upcoming HMDs.

At this very moment, some of this is doable with the “Virtual Reality Viewport Addon”. I’m using a 3rd-party Python wrapper of the Oculus SDK (generated partly with ctypesgen) that uses ctypes to access the library directly. Some details of this approach:

- The libraries used are from SDK 0.5 (while Oculus is soon releasing SDK 0.7)

- The wrapper was generated by someone else; I have yet to learn how to re-create it

- Direct mode is not implemented – basically I’m turning multiview on with side-by-side, screen grabbing the viewport, and applying a GLSL shader on it manually

- The wrapper is not being fully used; the projection matrix and the barrel distortion shaders are poorly done on the addon end
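As a rough illustration of the side-by-side plus distortion step, the sketch below shows in plain Python the kind of per-pixel math a barrel distortion fragment shader performs on its texture lookup coordinates. It assumes a radial polynomial in the squared distance from the lens center, and it assumes the lens center sits in the middle of each half of the frame, which real HMDs generally do not guarantee. The coefficients are placeholders, not values read from the SDK, and the function names are made up for this example.

```python
def split_side_by_side(u, v):
    """Map a full-frame coordinate (0..1) to (eye, x, y).

    eye is 0 for the left half and 1 for the right half; x and y run
    from -1 to 1 with the origin at the middle of that half, which is
    assumed here to be the lens center.
    """
    eye = 0 if u < 0.5 else 1
    local_u = (u - 0.5 * eye) * 2.0
    return eye, local_u * 2.0 - 1.0, v * 2.0 - 1.0

def barrel_distort(x, y, k=(1.0, 0.22, 0.24, 0.0)):
    """Scale a lens-centered coordinate by a polynomial in r squared.

    k holds placeholder coefficients; a real addon would take them from
    the HMD description instead of hard-coding them.
    """
    r2 = x * x + y * y
    scale = k[0] + k[1] * r2 + k[2] * r2 ** 2 + k[3] * r2 ** 3
    return x * scale, y * scale

# Where should the pixel at the far right of the left half sample from?
eye, x, y = split_side_by_side(0.4999, 0.5)
source_x, source_y = barrel_distort(x, y)
```

Points near the lens center barely move, while points near the edge are pushed outward, which is what compensates for the pincushion distortion of the HMD lenses.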

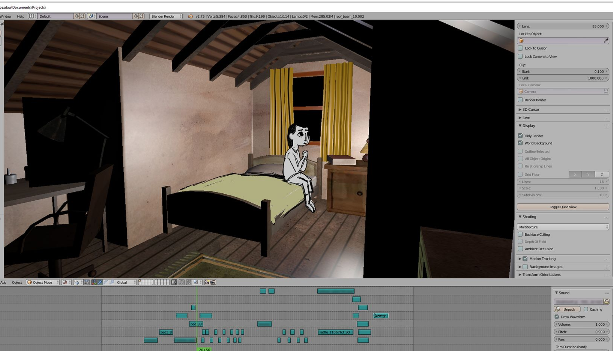

Fig - Virtual Reality Viewport Addon in action – sample scene from Creature Factory 2 by Andy Goralczyk

Not supporting Direct Mode (nor the latest Direct Driver Mode) seems to be a major drawback of this approach (Extended mode is deprecated in the latest SDKs). The positive points are: cross-platform, non-intrusiveness, (potentially) HMD agnostic.

The opposite approach would be to integrate the Oculus SDK directly into Blender. We could create the FBOs, gather the tracking data from the Oculus, force a drawing update every frame (~75Hz), and send the frame to the Oculus via Direct Mode. The downsides of this solution:

- License issue – dynamic linking may solve that

- Maintenance burden: if this doesn’t make it into master, the branch has to be kept up to date with the latest Blender developments

- Platform specific – which is hard to prevent since the Oculus SDK is Windows-only

- HMD specific – this solution is tailored towards Oculus only

- On the upside: performance as good as you could get

All considered, this is not a bad solution, and it may be the easiest one to implement. In fact, once we go this way, the same solution could be implemented in the Blender Game Engine.

That said, I would like to see a compromise: a solution that could eventually be expanded to different HMDs and other OSs (Operating Systems). Thus the ideal scenario would be to implement it as an addon. I like the idea of using ctypes with the Oculus SDK, but we would still need the following changes in Blender:

- Blender Python API to allow off-screen rendering straight to an FBO bind id

- Blender Python API OpenGL Wrapper to be extended to support glBindFramebuffer among other required GL functions

- Bonus: Blender Game Engine Video Texture ImageRender to support FBO rendering and custom OpenGL matrices

The OpenGL Wrapper change should be straightforward – I’ve done this a few times myself. The main off-screen rendering change may be self-contained enough to be incorporated in Blender without much hassle. The function should receive a projection matrix and a modelview matrix as input, as well as the resolution and the FBO bind id.
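To show the shape such a call could take from the addon side, here is a small sketch. Everything in it is hypothetical: `draw_offscreen` is not an existing Blender function, and the `backend` argument stands in for whatever C-side routine would do the actual drawing, so that the example runs outside Blender.

```python
def draw_offscreen(backend, projection, modelview, width, height, fbo_id):
    """Validate the arguments and forward them to the drawing backend.

    Hypothetical signature mirroring the inputs listed above: two 4x4
    matrices, the resolution, and the OpenGL framebuffer bind id.
    """
    for name, matrix in (("projection", projection), ("modelview", modelview)):
        if len(matrix) != 4 or any(len(row) != 4 for row in matrix):
            raise ValueError(name + " must be a 4x4 matrix")
    if width <= 0 or height <= 0:
        raise ValueError("resolution must be positive")
    if fbo_id < 0:
        raise ValueError("fbo_id must be a non-negative framebuffer name")
    backend(projection, modelview, width, height, fbo_id)

# Stand-in backend that only records what it was asked to draw.
calls = []

def recording_backend(projection, modelview, width, height, fbo_id):
    calls.append((width, height, fbo_id))

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

# One call per eye, each targeting its own (made-up) framebuffer id.
for eye_fbo in (1, 2):
    draw_offscreen(recording_backend, identity, identity, 960, 1080, eye_fbo)
```

Keeping the matrices as plain inputs is what would let the addon, rather than Blender itself, decide how head tracking and per-eye projection are computed for a given HMD.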

The BGE change would be a nice addition and would illustrate the strength of this approach. Given that the heavy lifting is still being done in C, Python shouldn’t affect the performance much, and the same approach could work in a game environment as well. The other advantage is that multiple versions of the SDK can be kept, in order to keep supporting OSX and Linux until a new cross-platform SDK is shipped.

Dalai Felinto, Sept. 2015